Table of Contents

- 1. Introduction

- 2. Technical Overview

- 3. Command Handling

- 4. Domain Modeling

- 5. Repositories and Event Stores

- 6. Event Processing

- 7. Managing complex business transactions

- 8. Testing

- 9. Using Spring

- 10. Performance Tuning

Axon is a lightweight framework that helps developers build scalable and extensible applications by addressing these concerns directly in the architecture. This reference guide explains what Axon is, how it can help you and how you can use it.

If you want to know more about Axon and its background, continue reading in Section 1.1, “Axon Framework Background”. If you're eager to get started building your own application using Axon, go quickly to Section 1.2, “Getting started”. If you're interested in helping out building the Axon Framework, Section 1.3, “Contributing to Axon Framework” will contain the information you require. All help is welcome. Finally, this chapter covers some legal concerns in Section 1.5, “License information”.

The demands on software projects increase rapidly as time progresses. Companies no longer accept a brochure-like homepage to promote their business; they want their (web)applications to evolve together with their business. That means that not only projects and code bases become more complex, it also means that functionality is constantly added, changed and (unfortunately not enough) removed. It can be frustrating to find out that a seemingly easy-to-implement feature can require development teams to take apart an entire application. Furthermore, today's webapplications target an audience of potentially billions of people, making scalability an indisputable requirement.

Although there are many applications and frameworks around that deal with scalability issues, such as GigaSpaces and Terracotta, they share one fundamental flaw. These stacks try to solve the scalability issues while letting developers develop applications using the layered architecture they are used to. In some cases, they even prevent or severely limit the use of a real domain model, forcing all domain logic into services. Although that is faster to start building an application, eventually this approach will cause complexity to increase and development to slow down.

The Command Query Responsiblity Segregation (CQRS) pattern addresses these issues by drastically changing the way applications are architected. Instead of separating logic into separate layers, logic is separated based on whether it is changing an application's state or querying it. That means that executing commands (actions that potentially change an application's state) are executed by different components than those that query for the application's state. The most important reason for this separation is the fact that there are different technical and non-technical requirements for each of them. When commands are executed, the query components are (a)synchronously updated using events. This mechanism of updates through events, is what makes this architecture is extensible, scalable and ultimately more maintainable.

![[Note]](images/note.png) | Note |

|---|---|

|

A full explanation of CQRS is not within the scope of this document. If you would like to have more background information about CQRS, visit the Axon Framework website: www.axonframework.org. It contains links to background information. |

Since CQRS is so fundamentally different than the layered-architecture which dominates the software landscape nowadays, it is quite hard to grasp. It is not uncommon for developers to walk into a few traps while trying to find their way around this architecture. That's why Axon Framework was conceived: to help developers implement CQRS applications while focussing on the business logic.

Axon Framework helps build scalable, extensible and maintainable applications by supporting developers apply the Command Query Responsibility Segregation (CQRS) architectural pattern. It does so by providing implementations of the most important building blocks, such as aggregates, repositories and event buses (the dispatching mechanism for events). Furthermore, Axon provides annotation support, which allows you to build aggregates and event listeners withouth tying your code to Axon specific logic. This allows you to focus on your business logic, instead of the plumbing, and helps you to make your code easier to test in isolation.

Axon does not, in any way, try to hide the CQRS architecture or any of its components from developers. Therefore, depending on team size, it is still advisable to have one or more developers with a thorough understanding of CQRS on each team. However, Axon does help when it comes to guaranteeing delivering events to the right event listeners and processing them concurrently and in the correct order. These multi-threading concerns are typically hard to deal with, leading to hard-to-trace bugs and sometimes complete application failure. When you have a tight deadline, you probably don't even want to care about these concerns. Axon's code is thoroughly tested to prevent these types of bugs.

The Axon Framework consists of a number of modules (jars) that provide the tools and components to build a scalable infrastructure. The Axon Core module provides the basic API's for the different components, and simple implemenatinos that provide solutions for single-JVM applications. The other modules address scalability or high-performance issues, by providing specialized building blocks.

Will each application benefit from Axon? Unfortunately not. Simple CRUD (Create, Read, Update, Delete) applications which are not expected to scale will probably not benefit from CQRS or Axon. Fortunately, there is a wide variety of applications that does benefit from Axon.

Applications that will most likely benefit from CQRS and Axon are those that show one or more of the following characteristics:

-

The application is likely to be extended with new functionality during a long period of time. For example, an online store might start off with a system that tracks progress of Orders. At a later stage, this could be extended with Inventory information, to make sure stocks are updated when items are sold. Even later, accounting can require financial statistics of sales to be recorded, etc. Although it is hard to predict how software projects will evolve in the future, the majority of this type of application is clearly presented as such.

-

The application has a high read-to-write ratio. That means data is only written a few times, and read many times more. Since data sources for queries are different to those that are used for command validation, it is possible to optimize these data sources for fast querying. Duplicate data is no longer an issue, since events are published when data changes.

-

The application presents data in many different formats. Many applications nowadays don't stop when showing information on a web page. Some applications, for example, send monthly emails to notify users of changes that occured that might be relevant to them. Search engines are another example. They use the same data your application does, but in a way that is optimized for quick searching. Reporting tools aggregate information into reports that show data evolution over time. This, again, is a different format of the same data. Using Axon, each data source can be updated independently of each other on a real-time or scheduled basis.

-

When an application has clearly separated components with different audiences, it can benefit from Axon, too. An example of such application is the online store. Employees will update product information and availability on the website, while customers place orders and query for their order status. With Axon, these components can be deployed on separate machines and scaled using different policies. They are kept up-to-date using the events, which Axon will dispatch to all subscribed components, regardles of the machine they are deployed on.

-

Integration with other applications can be cumbersome work. The strict definition of an application's API using commands and events makes it easier to integrate with external applications. Any application can send commands or listen to events generated by the application.

This section will explain how you can obtain the binaries for Axon to get started. There are currently two ways: either download the binaries from our website or configure a repository for your build system (Maven, Gradle, etc).

You can download the Axon Framework from our downloads page: axonframework.org/download.

This page offers a number of downloads. Typically, you would want to use the latest stable release. However, if you're eager to get started using the latest and greatest features, you could consider using the snapshot releases instead. The downloads page contains a number of assemblies for you to download. Some of them only provide the Axon library itself, while others also provide the libraries that Axon depends on. There is also a "full" zip file, which contains Axon, its dependencies, the sources and the documentation, all in a single download.

If you really want to stay on the bleeding edge of development, you can clone the

Git repository: git://github.com/AxonFramework/AxonFramework.git, or

visit https://github.com/AxonFramework/AxonFramework to browse the

sources online.

If you use maven as your build tool, you need to configure the correct dependencies for your project. Add the following code in your dependencies section:

<dependency> <groupId>org.axonframework</groupId> <artifactId>axon-core</artifactId> <version>2.2</version> </dependency>

Most of the features provided by the Axon Framework are optional and require additional dependencies. We have chosen not to add these dependencies by default, as they would potentially clutter your project with artifacts you don't need.

Axon Framework doesn't impose many requirements on the infrastructure. It has been built and tested against Java 6, making that more or less the only requirement.

Since Axon doesn't create any connections or threads by itself, it is safe to run

on an Application Server. Axon abstracts all asynchronous behavior by using

Executors, meaning that you can easily pass a container managed

Thread Pool, for example. If you don't use an Application Server (e.g. Tomcat, Jetty

or a stand-alone app), you can use the

Executors

class or the Spring

Framework to create and configure Thread Pools.

While implementing your application, you might run into problems, wonder about why certain things are the way they are, or have some questions that need an answer. The Axon Users mailinglist is there to help. Just send an email to axonframework@googlegroups.com. Other users as well as contributors to the Axon Framework are there to help with your issues.

If you find a bug, you can report them at axonframework.org/issues. When reporting an issue, please make sure you clearly describe the problem. Explain what you did, what the result was and what you expected to happen instead. If possible, please provide a very simple Unit Test (JUnit) that shows the problem. That makes fixing it a lot simpler.

Development on the Axon Framework is never finished. There will always be more features that we like to include in our framework to continue making development of scalabale and extensible application easier. This means we are constantly looking for help in developing our framework.

There are a number of ways in which you can contribute to the Axon Framework:

-

You can report any bugs, feature requests or ideas about improvemens on our issue page: axonframework.org/issues. All ideas are welcome. Please be as exact as possible when reporting bugs. This will help us reproduce and thus solve the problem faster.

-

If you have created a component for your own application that you think might be useful to include in the framework, send us a patch or a zip containing the source code. We will evaluate it and try to fit it in the framework. Please make sure code is properly documented using javadoc. This helps us to understand what is going on.

-

If you know of any other way you think you can help us, do not hesitate to send a message to the Axon Framework mailinglist.

Axon Framework is open source and freely available for anyone to use. However, if you have very specific requirements, or just want to be assured of someone to be standby to help you in case of trouble, Trifork provides several commercial support services for Axon Framework. These services include Training, Consultancy and Operational Support and are provided by the people that know Axon more than anyone else.

For more information about Trifork and its services, visit www.trifork.nl.

The Axon Framework and its documentation are licensed under the Apache License, Version 2.0. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0.

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

CQRS on itself is a very simple pattern. It only describes that the component of an application that processes commands should be separated from the component that processes queries. Although this separation is very simple on itself, it provides a number of very powerful features when combined with other patterns. Axon provides the building block that make it easier to implement the different patterns that can be used in combination with CQRS.

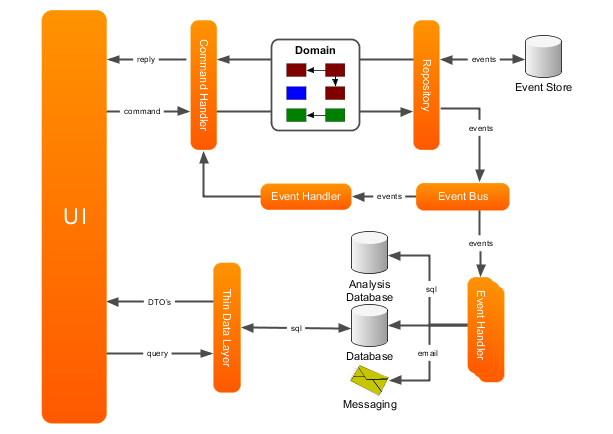

The diagram below shows an example of an extended layout of a CQRS-based event driven architecture. The UI component, displayed on the left, interacts with the rest of the application in two ways: it sends commands to the application (shown in the top section), and it queries the application for information (shown in the bottom section).

Commands are typically represented by simple and straightforward objects that contain all data necessary for a command handler to execute it. A command expresses its intent by its name. In Java terms, that means the class name is used to figure out what needs to be done, and the fields of the command provide the information required to do it.

The Command Bus receives commands and routes them to the Command Handlers. Each command handler responds to a specific type of command and executes logic based on the contents of the command. In some cases, however, you would also want to execute logic regardless of the actual type of command, such as validation, logging or authorization.

Axon provides building blocks to help you implement a command handling infrastructure with these features. These building blocks are thoroughly described in Chapter 3, Command Handling.

The command handler retrieves domain objects (Aggregates) from a repository and executes methods on them to change their state. These aggregates typically contain the actual business logic and are therefore responsible for guarding their own invariants. The state changes of aggregates result in the generation of Domain Events. Both the Domain Events and the Aggregates form the domain model. Axon provides supporting classes to help you build a domain model. They are described in Chapter 4, Domain Modeling.

Repositories are responsible for providing access to aggregates. Typically, these repositories are optimized for lookup of an aggregate by its unique identifier only. Some repositories will store the state of the aggregate itself (using Object Relational Mapping, for example), while other store the state changes that the aggregate has gone through in an Event Store. The repository is also responsible for persisting the changes made to aggregates in its backing storage.

Axon provides support for both the direct way of persisting aggregates (using object-relational-mapping, for example) and for event sourcing. More about repositories and event stores can be found in Chapter 5, Repositories and Event Stores.

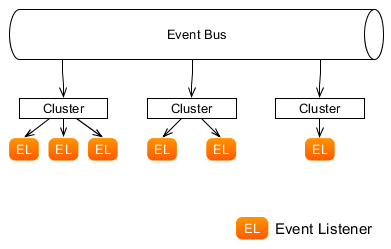

The event bus dispatches events to all interested event listeners. This can either be done synchronously or asynchronously. Asynchronous event dispatching allows the command execution to return and hand over control to the user, while the events are being dispatched and processed in the background. Not having to wait for event processing to complete makes an application more responsive. Synchronous event processing, on the other hand, is simpler and is a sensible default. Synchronous processing also allows several event listeners to process events within the same transaction.

Event listeners receive events and handle them. Some handlers will update data sources used for querying while others send messages to external systems. As you might notice, the command handlers are completely unaware of the components that are interested in the changes they make. This means that it is very non-intrusive to extend the application with new functionality. All you need to do is add another event listener. The events loosely couple all components in your application together.

In some cases, event processing requires new commands to be sent to the application. An example of this is when an order is received. This could mean the customer's account should be debited with the amount of the purchase, and shipping must be told to prepare a shipment of the purchased goods. In many applications, logic will become more complicated than this: what if the customer didn't pay in time? Will you send the shipment right away, or await payment first? The saga is the CQRS concept responsible for managing these complex business transactions.

The building blocks related to event handling and dispatching are explained in Chapter 6, Event Processing. Sagas are thoroughly explained in Chapter 7, Managing complex business transactions.

The thin data layer in between the user interface and the data sources provides a clearly defined interface to the actual query implementation used. This data layer typically returns read-only DTO objects containing query results. The contents of these DTO's are typically driven by the needs of the User Interface. In most cases, they map directly to a specific view in the UI (also referred to as table-per-view).

Axon does not provide any building blocks for this part of the application. The main reason is that this is very straightforward and doesn't differ from the layered architecture.

Axon Framework consists of a number of modules that target specific problem areas of CQRS. Depending on the exact needs of your project, you will need to include one or more of these modules.

As of Axon 2.1, all modules are OSGi compatible bundles. This means they contain the

required headers in the manifest file and declare the packages they import and export.

At the moment, only the Slf4J bundle (1.7.0 <= version < 2.0.0) is required. All

other imports are marked as optional, although you're very likely to need others, like

org.joda.time.

Axon's main modules are the modules that have been thorouhgly tested and are

robust enough to use in demanding production environments. The maven groupId of all

these modules is org.axonframework.

The Core module contains, as the name suggests, the Core components of Axon. If you use a single-node setup, this module is likely to provide all the components you need. All other Axon modules depend on this module, so it must always be available on the classpath.

The Test modules contains test fixtures that you can use to test Axon based components, such as your Command Handlers, Aggregates and Sagas. You typically do not need this module at runtime and will only need to be added to the classpath during tests.

The Distributed CommandBus modules contains a CommandBus implementation that can be used to distribute commands over multiple nodes. It comes with a JGroupsConnector that is used to connect DistributedCommandBus implementation on these nodes.

The AMQP module provides components that allow you to build up an EventBus using an AMQP-based message broker as distribution mechanism. This allows for guaranteed-delivery, even when the Event Handler node is temporarily unavailable.

The Integration module allows Axon components to be connected to Spring Integration channels. It provides an implementation for the EventBus that uses Spring Integration channels to distribute events as well as a connector to connect another EventBus to a Spring Integration channel.

MongoDB is a document based NoSQL database. The Mongo module provides an EventStore implementation that stores event streams in a MongoDB database.

Incubator modules have not undergone the same amount of testing as the main modules, are not as well documented, and may therefore not be suitable for demanding production environments. They are typically work-in-progress and may have some "rough edges". Use these modules at your own peril.

The maven groupId for incubator modules is

org.axonframework.incubator.

This module aims to provide an implementation of the EventStore that uses Cassandra as backing storage.

The Google App Engine modules provides building blocks that use specific API's provided by GAE, such as an Event Store that uses the DatastoreService and a special XStream based serializer that works around some of the limitations of the GAE platform.

To ensure maximum customizability, all Axon components are defined using interfaces. Abstract and concrete implementations are provided to help you on your way, but will nowhere be required by the framework. It is always possible to build a completely custom implementation of any building block using that interface.

Axon Framework provides extensive support for Spring, but does not require you to use Spring in order to use Axon. All components can be configured programmatically and do not require Spring on the classpath. However, if you do use Spring, much of the configuration is made easier with the use of Spring's namespace support. Building blocks, such as the Command Bus, Event Bus and Saga Managers can be configured using a single XML element in your Spring Application Context.

Check out the documentation of each building block for more information on how to configure it using Spring.

A state change within an application starts with a Command. A Command is a combination of expressed intent (which describes what you want done) as well as the information required to undertake action based on that intent. A Command Handler is responsible for handling commands of a certain type and taking action based on the information contained inside it.

The use of an explicit command dispatching mechanism has a number of advantages. First of all, there is a single object that clearly describes the intent of the client. By logging the command, you store both the intent and related data for future reference. Command handling also makes it easy to expose your command processing components to remote clients, via web services for example. Testing also becomes a lot easier, you could define test scripts by just defining the starting situation (given), command to execute (when) and expected results (then) by listing a number of events and commands (see Chapter 8, Testing). The last major advantage is that it is very easy to switch between synchronous and asynchronous command processing.

This doesn't mean Command dispatching using explicit command object is the only right way to do it. The goal of Axon is not to prescribe a specific way of working, but to support you doing it your way. It is still possible to use a Service layer that you can invoke to execute commands. The method will just need to start a unit of work (see Section 3.4, “Unit of Work”) and perform a commit or rollback on it when the method is finished.

The next sections provide an overview of the tasks related to creating a Command Handling infrastructure with the Axon Framework.

The Command Gateway is a convenient interface towards the Command dispatching mechanism. While you are not required to use a Gateway to dispatch Commands, it is generally the easiest option to do so.

There are two ways to use a Command Gateway. The first is to use the

CommandGateway interface and DefaultCommandGateway

implementation provided by Axon. The command gateway provides a number of methods that

allow you to send a command and wait for a result either synchronously, with a timeout

or asynchronously.

The other option is perhaps the most flexible of all. You can turn almost any

interface into a Command Gateway using the GatewayProxyFactory. This allows

you to define your application's interface using strong typing and declaring your own

(checked) business exceptions. Axon will automatically generate an implementation for

that interface at runtime.

Both your custom gateway and the one provided by Axon need to be configured with

at least access to the Command Bus. In addition, the Command Gateway can be

configured with a RetryScheduler and any number of

CommandDispatchInterceptors.

The RetryScheduler is capable of scheduling retries when command

execution has failed. The IntervalRetryScheduler is an implementation

that will retry a given command at set intervals until it succeeds, or a maximum

number of retries is done. When a command fails due to an exception that is

explicitly non-transient, no retries are done at all. Note that the retry scheduler

is only invoked when a command fails due to a RuntimeException. Checked

exceptions are regarded "business exception" and will never trigger a retry.

CommandDispatchInterceptors allow modification of

CommandMessages prior to dispatching them to the Command Bus. In

contrast to CommandDispatchInterceptors configured on the CommandBus,

these interceptors are only invoked when messages are sent through this gateway. The

interceptors can be used to attach meta data to a command or do validation, for

example.

The GatewayProxyFactory creates an instance of a Command Gateway

based on an interface class. The behavior of each method is based on the parameter

types, return type and declared exception. Using this gateway is not only

convenient, it makes testing a lot easier by allowing you to mock your interface

where needed.

This is how parameter affect the behavior of the CommandGateway:

-

The first parameter is expected to be the actual command object to dispatch.

-

Parameters annotated with

@MetaDatawill have their value assigned to the meta data field with the identifier passed as annotation parameter -

Parameters of type

CommandCallbackwill have theironSuccessoronFailureinvoked after the Command is handled. You may pass in more than one callback, and it may be combined with a return value. In that case, the invocations of the callback will always match with the return value (or exception). -

The last two parameters may be of types

long(orint) andTimeUnit. In that case the method will block at most as long as these parameters indicate. How the method reacts on a timeout depends on the exceptions declared on the method (see below). Note that if other properties of the method prevent blocking altogether, a timeout will never occur.

The declared return value of a method will also affect its behavior:

-

A

voidreturn type will cause the method to return immediately, unless there are other indications on the method that one would want to wait, such as a timeout or declared exceptions. -

A Future return type will cause the method to return immediately. You can access the result of the Command Handler using the Future instance returned from the method. Exceptions and timeouts declared on the method are ignored.

-

Any other return type will cause the method to block until a result is available. The result is cast to the return type (causing a ClassCastException if the types don't match).

Exceptions have the following effect:

-

Any declared checked exception will be thrown if the Command Handler (or an interceptor) threw an exceptions of that type. If a checked exception is thrown that has not been declared, it is wrapped in a

CommandExecutionException, which is aRuntimeException. -

When a timeout occurs, the default behavior is to return

nullfrom the method. This can be changed by declaring aTimeoutException. If this exception is declared, aTimeoutExceptionis thrown instead. -

When a Thread is interrupted while waiting for a result, the default behavior is to return null. In that case, the interrupted flag is set back on the Thread. By declaring an

InterruptedExceptionon the method, this behavior is changed to throw that exception instead. The interrupt flag is removed when the exception is thrown, consistent with the java specification. -

Other Runtime Exceptions may be declared on the method, but will not have any effect other than clarification to the API user.

Finaly, there is the possiblity to use annotations:

-

As specified in the parameter section, the

@MetaDataannotation on a parameter will have the value of that parameter added as meta data value. The key of the meta data entry is provided as parameter to the annotation. -

Methods annotated with

@Timeoutwill block at most the indicated amount of time. This annotation is ignored if the method declares timeout parameters. -

Classes annotated with

@Timeoutwill cause all methods declared in that class to block at most the indicated amount of time, unless they are annotated with their own@Timeoutannotation or specify timeout parameters.

public interface MyGateway { // fire and forget void sendCommand(MyPayloadType command); // method that attaches meta data and will wait for a result for 10 seconds @Timeout(value = 10, unit = TimeUnit.SECONDS) ReturnValue sendCommandAndWaitForAResult(MyPayloadType command, @MetaData("userId") String userId); // alternative that throws exceptions on timeout @Timeout(value = 20, unit = TimeUnit.SECONDS) ReturnValue sendCommandAndWaitForAResult(MyPayloadType command) throws TimeoutException, InterruptedException; // this method will also wait, caller decides how long void sendCommandAndWait(MyPayloadType command, long timeout, TimeUnit unit) throws TimeoutException, InterruptedException; } // To create an instance: GatewayProxyFactory factory = new GatewayProxyFactory(commandBus); MyGateway myGateway = factory.createInstance(MyGateway.class);

When using Spring, the easiest way to create a custom Command Gateway is by

using the CommandGatewayFactoryBean. It uses setter injection,

making it easier to configure. Only the "commandBus" property is mandatory.

<bean id="myGateway" class="org.axonframework.commandhandling.gateway.CommandGatewayFactoryBean">

<property name="commandBus" ref="commandBus"/>

<property name="gatewayInterface" value="package.to.MyGateway"/>

</bean>

The Command Bus is the mechanism that dispatches commands to their respective Command

Handler. Commands are always sent to only one (and exactly one) command handler. If no

command handler is available for a dispatched command, an exception

(NoHandlerForCommandException) is thrown. Subscribing multiple command

handlers to the same command type will result in subscriptions replacing each other. In

that case, the last subscription wins.

The CommandBus provides two methods to dispatch commands to their respective

handler: dispatch(commandMessage, callback) and

dispatch(commandMessage). The first parameter is a message

containing the actual command to dispatch. The optional second parameter takes a

callback that allows the dispatching component to be notified when command handling

is completed. This callback has two methods: onSuccess() and

onFailure(), which are called when command handling returned

normally, or when it threw an exception, respectively.

The calling component may not assume that the callback is invoked in the same

thread that dispatched the command. If the calling thread depends on the result

before continuing, you can use the FutureCallback. It is a combination

of a Future (as defined in the java.concurrent package) and Axon's

CommandCallback. Alternatively, consider using a Command

Gateway.

Best scalability is achieved when your application is not interested in the result

of a dispatched command at all. In that case, you should use the single-parameter

version of the dispatch method. If the CommandBus is fully

asynchronous, it will return immediately after the command has been successfully

dispatched. Your application will just have to guarantee that the command is

processed and with "positive outcome", sooner or later...

The SimpleCommandBus is, as the name suggests, the simplest

implementation. It does straightforward processing of commands in the thread that

dispatches them. After a command is processed, the modified aggregate(s) are saved

and generated events are published in that same thread. In most scenario's, such as

web applications, this implementation will suit your needs.

The SimpleCommandBus allows interceptors to be configured.

CommandDispatchInterceptors are invoked when a command is

dispatched on the Command Bus. The CommandHandlerInterceptors are

invoked before the actual command handler method is, allowing you to do modify or

block the command. See Section 3.5, “Command Interceptors” for more

information.

The SimpleCommandBus maintains a Unit of Work for each command published. This Unit of Work is created by a factory: the UnitOfWorkFactory. To suit any specifc needs your application might have, you can supply your own factory to change the Unit of Work implementation used.

Since all command processing is done in the same thread, this implementation is limited to the JVM's boundaries. The performance of this implementation is good, but not extraordinary. To cross JVM boundaries, or to get the most out of your CPU cycles, check out the other CommandBus implementations.

The SimpleCommandBus has reasonable performance characteristics, especially when you've gone through the performance tips in Chapter 10, Performance Tuning. The fact that the SimpleCommandBus needs locking to prevent multiple threads from concurrently accessing the same aggregate causes processing overhead and lock contention.

The DisruptorCommandBus takes a different approach to multithreaded

processing. Instead of having multiple threads each doing the same process, there

are multiple threads, each taking care of a piece of the process. The

DisruptorCommandBus uses the Disruptor (http://lmax-exchange.github.io/disruptor/), a small framework for

concurrent programming, to achieve much better performance, by just taking a

different approach to multithreading. Instead of doing the processing in the calling

thread, the tasks are handed of to two groups of threads, that each take care of a

part of the processing. The first group of threads will execute the command handler,

changing an aggregate's state. The second group will store and publish the events to

the Event Store and Event Bus.

While the DisruptorCommandBus easily outperforms the

SimpleCommandBus by a factor 4(!), there are a few limitations:

-

The DisruptorCommandBus only supports Event Sourced Aggregates. This Command Bus also acts as a Repository for the aggreates processed by the Disruptor. To get a reference to the Repository, use

createRepository(AggregateFactory). -

A Command can only result in a state change in a single aggregate instance.

-

When using a Cache, it allows only a single aggregate for a given identifier. This means it is not possible to have two aggregates of different types with the same identifier.

-

Commands should generally not cause a failure that requires a rollback of the Unit of Work. When a rollback occurs, the DisruptorCommandBus cannot guarantee that Commands are processed in the order they were dispatched. Furthermore, it requires a retry of a number of other commands, causing unnecessary computations.

-

When creating a new Aggregate Instance, commands updating that created instance may not all happen in the exact order as provided. Once the aggregate is created, all commands will be executed exactly in the order they were dispatched. To ensure the order, use a callback on the creating command to wait for the aggregate being created. It shouldn't take more than a few milliseconds.

To construct a DisruptorCommandBus instance, you need an

AggregateFactory, an EventBus and

EventStore. These components are explained in Chapter 5, Repositories and Event Stores and Section 6.1, “Event Bus”. Finally,

you also need a CommandTargetResolver. This is a mechanism that

tells the disruptor which aggregate is the target of a specific Command. There

are two implementations provided by Axon: the

AnnotationCommandTargetResolver, which uses annotations to

describe the target, or the MetaDataCommandTargetResolver, which

uses the Command's Meta Data fields.

Optionally, you can provide a DisruptorConfiguration instance,

which allows you to tweak the configuration to optimize performance for your

specific environment. Spring users can use the <axon:disruptor-command-bus>

element for easier configuration of the DisruptorCommandBus.

-

Buffer size: The number of slots on the ring buffer to register incoming commands. Higer values may increase throughput, but also cause higher latency. Must always be a power of 2. Defaults to 4096.

-

ProducerType: Indicates whether the entries are produced by a single thread, or multiple. Defaults to multiple.

-

WaitStrategy: The strategy to use when the processor threads (the three threads taking care of the actual processing) need to wait for eachother. The best WaitStrategy depends on the number of cores available in the machine, and the number of other processes running. If low latency is crucial, and the DisruptorCommandBus may claim cores for itself, you can use the

BusySpinWaitStrategy. To make the Command Bus claim less of the CPU and allow other threads to do processing, use theYieldingWaitStrategy. Finally, you can use theSleepingWaitStrategyandBlockingWaitStrategyto allow other processes a fair share of CPU. The latter is suitable if the Command Bus is not expected to be processing full-time. Defaults to theBlockingWaitStrategy. -

Executor: Sets the Executor that provides the Threads for the DisruptorCommandBus. This executor must be able to provide at least 4 threads. 3 threads are claimed by the processing components of the DisruptorCommandBus. Extra threads are used to invoke callbacks and to schedule retries in case an Aggregate's state is detected to be corrupt. Defaults to a CachedThreadPool that provides threads from a thread group called "DisruptorCommandBus".

-

TransactionManager: Defines the Transaction Manager that should ensure the storage of events and their publication are executed transactionally.

-

InvokerInterceptors: Defines the

CommandHandlerInterceptors that are to be used in the invocation process. This is the process that calls the actual Command Handler method. -

PublisherInterceptors: Defines the

CommandHandlerInterceptors that are to be used in the publication process. This is the process that stores and publishes the generated events. -

RollbackConfiguration: Defines on which Exceptions a Unit of Work should be rolled back. Defaults to a configuration that rolls back on unchecked exceptions.

-

RescheduleCommandsOnCorruptState: Indicates whether Commands that have been executed against an Aggregate that has been corrupted (e.g. because a Unit of Work was rolled back) should be rescheduled. If

falsethe callback'sonFailure()method will be invoked. Iftrue(the default), the command will be rescheduled instead. -

CoolingDownPeriod: Sets the number of seconds to wait to make sure all commands are processed. During the cooling down period, no new commands are accepted, but existing commands are processed, and rescheduled when necessary. The cooling down period ensures that threads are available for rescheduling the commands and calling callbacks. Defaults to 1000 (1 second).

-

Cache: Sets the cache that stores aggregate instances that have been reconstructed from the Event Store. The cache is used to store aggregate instances that are not in active use by the disruptor.

-

InvokerThreadCount: The number of threads to assign to the invocation of command handlers. A good starting point is half the number of cores in the machine.

-

PublisherThreadCount: The number of threads to use to publish events. A good starting point is half the number of cores, and could be increased if a lot of time is spent on IO.

-

SerializerThreadCount: The number of threads to use to pre-serialize events. This defaults to 1, but is ignored if no serializer is configured.

-

Serializer: The serializer to perform pre-serialization with. When a serializer is configured, the DisruptorCommandBus will wrap all generated events in a SerializationAware message. The serialized form of the payload and meta data is attached before they are published to the Event Store or Event Bus. This can drastically improve performance when the same serializer is used to store and publish events to a remote destination.

The Command Handler is the object that receives a Command of a pre-defined type and takes action based on its contents. In Axon, a Command may be any object. There is no predefined type that needs to be implemented.

A Command Handler must implement the CommandHandler interface. This

interface declares only a single method: Object handle(CommandMessage<T>

command, UnitOfWork uow), where T is the type of Command this Handler can

process. The concept of the UnitOfWork is explained in Section 3.4, “Unit of Work”. Be weary when using return values. Typically, it is a bad idea to use return

values to return server-generated identifiers. Consider using client-generated

(random) identifiers, such as UUIDs. They allow for a "fire and forget" style of

command handlers, where a client does not have to wait for a response. As return

value in such a case, you are recommended to simply return null.

You can subscribe and unsubscribe command handlers using the

subscribe and unsubscribe methods on

CommandBus, respectively. They both take two parameters: the type

of command to (un)subscribe the handler to, and the handler to (un)subscribe. An

unsubscription will only be successful if the handler passed as the second parameter

was currently assigned to handle that type of command. If another command was

subscribed to that type of command, nothing happens.

CommandBus commandBus = new SimpleCommandBus(); // to subscribe a handler: commandBus.subscribe(MyPayloadType.class.getName(), myCommandHandler); // we can subscribe the same handler to different command types commandBus.subscribe(MyOtherPayload.class.getName(), myCommandHandler); // we can also unsubscribe the handler from one of these types: commandBus.unsubscribe(MyOtherPayload.class.getName(), myCommandHandler); // we don't have to use the payload to identifier the command type (but it's a good default) commandBus.subscribe("MyCustomCommand", myCommandHandler);

More often than not, a command handler will need to process several types of

closely related commands. With Axon's annotation support you can use any POJO as

command handler. Just add the @CommandHandler annotation to your

methods to turn them into a command handler. These methods should declare the

command to process as the first parameter. They may take optional extra parameters,

such as the UnitOfWork for that command (see Section 3.4, “Unit of Work”). Note that for each command type, there may only be

one handler! This restriction counts for all handlers registered to the same command

bus.

![[Note]](images/note.png) | Using Spring |

|---|---|

|

If you use Spring, you can add the |

![[Caution]](images/caution.png) | Detecting ParameterResolverFactories in an OSGi container |

|---|---|

|

At this moment, OSGi support is limited to the fact that the required headers

are mentioned in the manifest file. The automatic detection of

ParameterResolverFactory instances works in OSGi, but due to classloader

limitations, it might be necessary to copy the contents of the

|

It is not unlikely that most command handler operations have an identical

structure: they load an Aggregate from a repository and call a method on the

returned aggregate using values from the command as parameter. If that is the

case, you might benefit from a generic command handler: the

AggregateAnnotationCommandHandler. This command handler uses

@CommandHandler

annotations on the aggregate's methods to

identify which methods need to be invoked for an incoming command. If the

@CommandHandler

annotation is placed on a constructor, that

command will cause a new Aggregate instance to be created.

The

AggregateAnnotationCommandHandler

still needs to know which

aggregate instance (identified by it's unique Aggregate Identifier) to load and

which version to expect. By default, the

AggregateAnnotationCommandHandler

uses annotations on the

command object to find this information. The

@TargetAggregateIdentifier

annotation must be put on a field or

getter method to indicate where the identifier of the target Aggregate can be

found. Similarly, the

@TargetAggregateVersion

may be used to

indicate the expected version.

The

@TargetAggregateIdentifier

annotation can be placed on a

field or a method. The latter case will use the return value of a method

invocation (without parameters) as the value to use.

If you prefer not to use annotations, the behavior can be overridden by

supplying a custom CommandTargetResolver. This class should return

the Aggregate Identifier and expected version (if any) based on a given command.

![[Note]](images/note.png) | Creating new Aggregate Instances |

|---|---|

|

When the

|

public class MyAggregate extends AbstractAnnotatedAggregateRoot { @AggregateIdentifier private String id; @CommandHandler public MyAggregate(CreateMyAggregateCommand command) { apply(new MyAggregateCreatedEvent(IdentifierFactory.getInstance().generateIdentifier())); } // no-arg constructor for Axon MyAggregate() { } @CommandHandler public void doSomething(DoSomethingCommand command) { // do something... } // code omitted for brevity. The event handler for MyAggregateCreatedEvent must set the id field } public class DoSomethingCommand { @TargetAggregateIdentifier private String aggregateId; // code omitted for brevity } // to generate the command handlers for this aggregate: AggregateAnnotationCommandHandler handler = AggregateAnnotationCommandHandler.subscribe(MyAggregate.class, repository); // or when using another type of CommandTargetResolver: AggregateAnnotationCommandHandler handler = AggregateAnnotationCommandHandler.subscribe(MyAggregate.class, repository, myOwnCommandTargetResolver); // to subscribe: for (String supportedCommand : handler.supportedCommands()) { commandBus.subscribe(supportedCommand, handler); } // to unsubscribe: for (String supportedCommand : handler.supportedCommands()) { commandBus.unsubscribe(supportedCommand, handler); }

![[Note]](images/note.png) | Using Spring |

|---|---|

|

If you use Spring, you can use the

|

Since version 2.2, @CommandHandler annotatations are not limited

to the aggregate root. Placing all command handlers in the root will sometimes

lead to a large number of methods on the aggregate root, while many of them

simply forward the invocation to one of the underlying entities. If that is the

case, you may place the @CommandHandler annotation on one of the

underlying entities' methods. For Axon to find these annotated methods, the

field declaring the entity in the aggregate root must be marked with

@CommandHandlingMember. Note that only the declared type of the

annotated field is inspected for Command Handlers. If a field value is null at

the time an incoming command arrives for that entity, an exception is thrown.

public class MyAggregate extends AbstractAnnotatedAggregateRoot {

@AggregateIdentifier

private String id;

@CommandHandlingMember

private MyEntity entity;

@CommandHandler

public MyAggregate(CreateMyAggregateCommand command) {

apply(new MyAggregateCreatedEvent(IdentifierFactory.getInstance().generateIdentifier()));

}

// no-arg constructor for Axon

MyAggregate() {

}

@CommandHandler

public void doSomething(DoSomethingCommand command) {

// do something...

}

// code omitted for brevity. The event handler for MyAggregateCreatedEvent must set the id field

// and somewhere in the lifecycle, a value for "entity" must be assigned to be able to accept

// DoSomethingInEntityCommand commands.

}

public class MyEntity extends AbstractAnnotatedEntity { // extends not strictly required for @CommandHandler

@CommandHandler

public void handleSomeCommand(DoSomethingInEntityCommand command) {

// do something

}

}![[Note]](images/note.png) | Note |

|---|---|

|

Note that each command must have exactly one handler in the aggregate.

This means that you cannot annotated multiple entities (either root nor

not) with @CommandHandler, when they handle the same command type. This

also means that it is not possible to annotate fields containing

collections with The runtime type of the field does not have to be exactly the declared

type. However, only the declared type of the

|

The Unit of Work is an important concept in the Axon Framework. The processing of a command can be seen as a single unit. Each time a command handler performs an action, it is tracked in the current Unit of Work. When command handling is finished, the Unit of Work is committed and all actions are finalized. This means that any repositores are notified of state changes in their aggregates and events scheduled for publication are sent to the Event Bus.

The Unit of Work serves two purposes. First, it makes the interface towards repositories a lot easier, since you do not have to explicitly save your changes. Secondly, it is an important hook-point for interceptors to find out what a command handler has done.

In most cases, you are unlikely to need access to the Unit of Work. It is mainly used

by the building blocks that Axon provides. If you do need access to it, for whatever

reason, there are a few ways to obtain it. The Command Handler receives the Unit Of Work

through a parameter in the handle method. If you use annotation support, you may add a

parameter of type UnitOfWork to your annotated method. In other locations,

you can retrieve the Unit of Work bound to the current thread by calling

CurrentUnitOfWork.get(). Note that this method will throw an exception

if there is no Unit of Work bound to the current thread. Use

CurrentUnitOfWork.isStarted() to find out if one is available.

One reason to require access to the current Unit of Work is to dispatch Events as part

of a transaction, but those Events do not originate from an Aggregate. For example, you

might want to publish an Event from a Command Handler, but don't require that Event to

be stored as state in an Event Store. In such case, you can call

CurrentUnitOfWork.get().publishEvent(event, eventBus), where

event is the Event (Message) to publish and EventBus the

Bus to publish it on. The actual publication of the message is postponed unit the Unit

of Work is committed, respecting the order in which Events have been registered.

![[Note]](images/note.png) | Note |

|---|---|

|

Note that the Unit of Work is merely a buffer of changes, not a replacement for Transactions. Although all staged changes are only committed when the Unit of Work is committed, its commit is not atomic. That means that when a commit fails, some changes might have been persisted, while other are not. Best practices dictate that a Command should never contain more than one action. If you stick to that practice, a Unit of Work will contain a single action, making it safe to use as-is. If you have more actions in your Unit of Work, then you could consider attaching a transaction to the Unit of Work's commit. See the section called “Binding the Unit of Work to a Transaction”. |

Your command handlers may throw an Exception as a result of command processing. By

default, unchecked exceptions will cause the UnitOfWork to roll back all changes. As

a result, no Events are stored or published. In some cases, however, you might want

to commit the Unif of Work and still notify the dispatcher of the command of an

exception through the callback. The SimpleCommandBus allows you to

provide a RollbackConfiguration. The RollbackConfiguration

instance indicates whether an exception should perform a rollback on the Unit of

Work, or a commit. Axon provides two implementation, which should cover most of the

cases.

The RollbackOnAllExceptionsConfiguration will cause a rollback on any

exception (or error). The default configuration, the

RollbackOnUncheckedExceptionConfiguration, will commit the Unit of

Work on checked exceptions (those not extending RuntimeException) while

still performing a rollback on Errors and Runtime Exceptions.

When using a Command Bus, the lifeycle of the Unit of Work will be automatically managed for you. If you choose not to use explicit command objects and a Command Bus, but a Service Layer instead, you will need to programatically start and commit (or roll back) a Unit of Work instead.

In most cases, the DefaultUnitOfWork will provide you with the functionality you

need. It expects Command processing to happen within a single thread. To start a new

Unit Of Work, simply call DefaultUnitOfWork.startAndGet();. This will

start a Unit of Work, bind it to the current thread (making it accessible via

CurrentUnitOfWork.get()), and return it. When processing is done,

either invoke unitOfWork.commit(); or

unitOfWork.rollback(optionalException).

Typical usage is as follows:

UnitOfWork uow = DefaultUnitOfWork.startAndGet(); try { // business logic comes here uow.commit(); } catch (Exception e) { uow.rollback(e); // maybe rethrow... }

A Unit of Work knows several phases. Each time it progresses to another phase, the UnitOfWork Listeners are notified.

-

Active phase: this is where the Unit of Work starts. Each time an event is registered with the Unit of Work, the

onEventRegisteredmethod is called. This method may alter the event message, for example to attach meta data to it. The return value of the method is the new EventMessage instance to use. -

Commit phase: before a Unit of Work is committed, the listeners'

onPrepareCommitmethods are invoked. This method is provided with the set of aggregates and list of Event Messages being stored. If a Unit of Work is bound to a transaction, theonPrepareTransactionCommitmethod is invoked. When the commit succeeded, theafterCommitmethod is invoked. If a commit failed, theonRollbackis used. This method has a parameter which defines the cause of the failure, if available. -

Cleanup phase: This is the phase where any of the resources held by this Unit of Work (such as locks) are to be released. If multiple Units Of Work are nested, the cleanup phase is postponed until the outer unit of work is ready to clean up.

The command handling process can be considered an atomic procedure; it should either be processed entirely, or not at all. Axon Framework uses the Unit Of Work to track actions performed by the command handlers. After the command handler completed, Axon will try to commit the actions registered with the Unit Of Work. This involves storing modified aggregates (see Chapter 4, Domain Modeling) in their respective repository (see Chapter 5, Repositories and Event Stores) and publishing events on the Event Bus (see Chapter 6, Event Processing).

The Unit Of Work, however, it is not a replacement for a transaction. The Unit Of Work only ensures that changes made to aggregates are stored upon successful execution of a command handler. If an error occurs while storing an aggregate, any aggregates already stored are not rolled back.

It is posssible to bind a transaction to a Unit of Work. Many CommandBus

implementations, like the SimpleCommandBus and DisruptorCommandBus, allow you to

configure a Transaction Manager. This Transaction Manager will then be used to

create the transactions to bind to the Unit of Work that is used to manage the

process of a Command. When a Unit of Work is bound to a transaction, it will ensure

the bound transaction is committed at the right point in time. It also allows you to

perform actions just before the transaction is committed, through the

UnitOfWorkListener's onPrepareTransactionCommit

method.

When creating Unit of Work programmatically, you can use the

DefaultUnitOfWork.startAndGet(TransactionManager) method to create

a Unit of Work that is bound to a transaction. Alternatively, you can initialize the

DefaultUnitOfWorkFactory with a TransactionManager to

allow it to create Transaction-bound Unit of Work.

When application components need resources at different stages of Command

processing, such as a Database Connection or an EntityManager, these resources can

be attached to the Unit of Work. By using the attachResource(String name,

Object resource) method, you attach a resource to the Unit of Work, which

can be retrieved using the getResource(String name) method. You can

also attach a resource that is also used by any nested Unit of Work using the

attachResource(String name, Object resource, boolean inherited), by

providing inherited as true.

One of the advantages of using a command bus is the ability to undertake action based on all incoming commands. Examples are logging or authentication, which you might want to do regardless of the type of command. This is done using Interceptors.

There are two types of interceptors: Command Dispatch Interceptors and Command Handler Interceptors. The former are invoked before a command is dispatched to a Command Handler. At that point, it may not even be sure that any handler exists for that command. The latter are invoked just before the Command Handler is invoked.

Command Dispatch Interceptors are invoked when a command is dispatched on a Command Bus. They have the ability to alter the Command Message, by adding Meta Data, for example, or block the command by throwing an Exception. These interceptors are always invoked on the thread that dispatches the Command.

There is no point in processing a command if it does not contain all required information in the correct format. In fact, a command that lacks information should be blocked as early as possible, preferably even before any transaction is started. Therefore, an interceptor should check all incoming commands for the availability of such information. This is called structural validation.

Axon Framework has support for JSR 303 Bean Validation based validation. This

allows you to annotate the fields on commands with annotations like

@NotEmpty and @Pattern. You need to include a JSR

303 implementation (such as Hibernate-Validator) on your classpath. Then,

configure a BeanValidationInterceptor on your Command Bus, and it

will automatically find and configure your validator implementation. While it

uses sensible defaults, you can fine-tune it to your specific needs.

![[Tip]](images/tip.png) | Tip |

|---|---|

|

You want to spend as less resources on an invalid command as possible. Therefore, this interceptor is generally placed in the very front of the interceptor chain. In some cases, a Logging or Auditing interceptor might need to be placed in front, with the validating interceptor immediately following it. |

The BeanValidationInterceptor also implements

CommandHandlerInterceptor, allowing you to configure it as a

Handler Interceptor as well.

Command Handler Interceptors can take action both before and after command processing. Interceptors can even block command processing altogether, for example for security reasons.

Interceptors must implement the CommandHandlerInterceptor interface.

This interface declares one method, handle, that takes three

parameters: the command message, the current UnitOfWork and an

InterceptorChain. The InterceptorChain is used to

continue the dispatching process.

Well designed events will give clear insight in what has happened, when and why. To use the event store as an Audit Trail, which provides insight in the exact history of changes in the system, this information might not be enough. In some cases, you might want to know which user caused the change, using what command, from which machine, etc.

The AuditingInterceptor is an interceptor that allows you to

attach arbitrary information to events just before they are stored or published.

The AuditingInterceptor uses an AuditingDataProvider

to retrieve the information to attach to these events. You need to provide the

implementation of the AuditingDataProvider yourself.

An Audit Logger may be configured to write to an audit log. To do so, you can

implement the AuditLogger interface and configure it in the

AuditingInterceptor. The audit logger is notified both on

succesful execution of the command, as well as when execution fails. If you use

event sourcing, you should be aware that the event log already contains the

exact details of each event. In that case, it could suffice to just log the

event identifier or aggregate identifier and sequence number combination.

![[Note]](images/note.png) | Note |

|---|---|

|

Note that the log method is called in the same thread as the command processing. This means that logging to slow sources may result in higher response times for the client. When important, make sure logging is done asynchronously from the command handling thread. |

Event Messages are immutable. The Unit of Work listeners allow you to alter

information in an Event Message in the onEventRegistered() method. However, in

some cases, you need to attach information to Events after all events have been

registered with the Unit of Work. Axon provides the abstract

MetaDataMutatingUnitOfWorkListenerAdapter, which allows you to

assign meta-data to Events just before the Unit of Work is committed. Simply

extend the MetaDataMutatingUnitOfWorkListenerAdapter class and implement the

abstract assignMetaData(EventMessage event, List<EventMessage> events,

int index) method. The first parameter is the EventMessage to provide

the Meta Data for. The List<EventMessage> and the

index are respectively the complete list of events part of this

commit and the index at which the first parameter can be found in the

list.

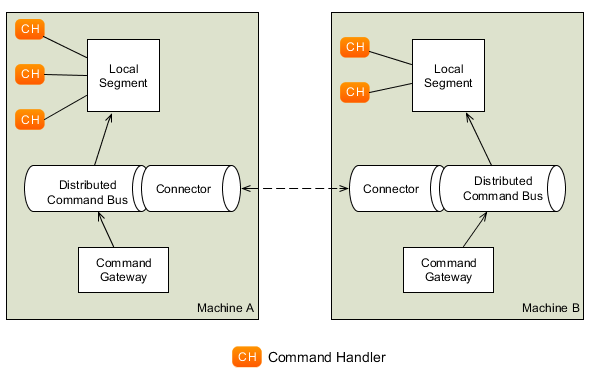

The CommandBus implementations described in Section 3.2, “The Command Bus” only allow Command Messages to be dispatched within a single JVM. Sometimes, you want multiple instances of Command Buses in different JVM's to act as one. Commands dispatched on one JVM's Command Bus should be seamlessly transported to a Command Handler in another JVM while sending back any results.

That's where the DistributedCommandBus comes in. Unlike the other

CommandBus implementations, the DistributedCommandBus does

not invoke any handlers at all. All it does is form a "bridge" between Command Bus

implementations on different JVM's. Each instance of the

DistributedCommandBus on each JVM is called a "Segment".

![[Note]](images/note.png) | Dependencies |

|---|---|

|

The distributed command bus is not part of the Axon Framework Core module, but in the axon-distributed-commandbus module. If you use Maven, make sure you have the appropriate dependencies set. The groupId and version are identical to those of the Core module. |

The DistributedCommandBus relies on two components: a

CommandBusConnector, which implements the communication protocol

between the JVM's, and the RoutingStrategy, which provides a Routing Key

for each incoming Command. This Routing Key defines which segment of the Distributed

Command Bus should be given a Command. Two commands with the same routing key will

always be routed to the same segment, as long as there is no change in the number and

configuration of the segments. Generally, the identifier of the targeted aggregate is

used as a routing key.

Two implementations of the RoutingStrategy are provided: the

MetaDataRoutingStrategy, which uses a Meta Data property in the Command

Message to find the routing key, and the AnnotationRoutingStrategy, which

uses the @TargetAggregateIdentifier annotation on the Command Messages

payload to extract the Routing Key. Obviously, you can also provide your own

implementation.

By default, the RoutingStrategy implementations will throw an exception when no key can be resolved from a Command Message. This behavior can be altered by providing a UnresolvedRoutingKeyPolicy in the constructor of the MetaDataRoutingStrategy or AnnotationRoutingStrategy. There are three possible policies:

-

ERROR: This is the default, and will cause an exception to be thrown when a Routing Key is not available

-

RANDOM_KEY: Will return a random value when a Routing Key cannot be resolved from the Command Message. This effectively means that those commands will be routed to a random segment of the Command Bus.

-

STATIC_KEY: Will return a static key (being "unresolved") for unresolved Routing Keys. This effectively means that all those commands will be routed to the same segment, as long as the configuration of segments does not change.

The JGroupsConnector uses (as the name already gives away) JGroups as

the underlying discovery and dispatching mechanism. Describing the feature set of

JGroups is a bit too much for this reference guide, so please refer to the JGroups User Guide for more

details.

The JGroupsConnector has four mandatory configuration elements:

-

The first is a JChannel, which defines the JGroups protocol stack. Generally, a JChannel is constructed with a reference to a JGroups configuration file. JGroups comes with a number of default confgurations which can be used as a basis for your own configuration. Do keep in mind that IP Multicast generally doesn't work in Cloud Services, like Amazon. TCP Gossip is generally a good start in such type of environment.

-

The Cluster Name defines the name of the Cluster that each segment should register to. Segments with the same Cluster Name will eventually detect eachother and dispatch Command among eachother.

-

A "local segment" is the Command Bus implementation that dispatches Commands destined for the local JVM. These commands may have been dispatched by instances on other JVM's or from the local one.

-

Finally, the Serializer is used to serialize command messages before they are sent over the wire.

Ultimately, the JGroupsConnector needs to actually connect, in order to dispatch

Messages to other segments. To do so, call the connect() method. It

takes a single parameter: the load factor. The load factor defines how much load,

relative to the other segments this segment should receive. A segment with twice the

load factor of another segment will be assigned (approximately) twice the amount of

routing keys as the other segments. Note that when commands are unevenly distributed

over the rouing keys, segments with lower load factors could still receive more

command than a segment with a higher load factor.

JChannel channel = new JChannel("path/to/channel/config.xml"); CommandBus localSegment = new SimpleCommandBus(); Serializer serializer = new XStreamSerializer(); JGroupsConnector connector = new JGroupsConnector(channel, "myCommandBus", localSegment, serializer); DistributedCommandBus commandBus = new DistributedCommandBus(connector); // on one node: connector.connect(50); commandBus.subscribe(CommandType.class.getName(), handler); // on another node with more CPU: connector.connect(150); commandBus.subscribe(CommandType.class.getName(), handler); commandBus.subscribe(AnotherCommandType.class.getName(), handler2); // from now on, just deal with commandBus as if it is local...

![[Note]](images/note.png) | Note |

|---|---|

|

Note that it is not required that all segments have Command Handlers for the same type of Comands. You may use different segments for different Command Types altogether. The Distributed Command Bus will always choose a node to dispatch a Command to that has support for that specific type of Command. |

If you use Spring, you may want to consider using the

JGroupsConnectorFactoryBean. It automatically connects the

Connector when the ApplicationContext is started, and does a proper disconnect

when the ApplicationContext is shut down. Furthermore, it uses

sensible defaults for a testing environment (but should not be considered

production ready) and autowiring for the configuration.

In a CQRS-based application, a Domain Model (as defined by Eric Evans and Martin Fowler) can be a very powerful mechanism to harness the complexity involved in the validation and execution of state changes. Although a typical Domain Model has a great number of building blocks, two of them play a major role when applied to CQRS: the Event and the Aggregate.

The following sections will explain the role of these building blocks and how to implement them using the Axon Framework.

Events are objects that describe something that has occurred in the application. A typical source of events is the Aggregate. When something important has occurred within the aggregate, it will raise an Event. In Axon Framework, Events can be any object. You are highly encouraged to make sure all events are serializable.

When Events are dispatched, Axon wraps them in a Message. The actual type of Message

used depends on the origin of the Event. When an Event is raised by an Aggregate, it is

wrapped in a DomainEventMessage (which extends EventMesssage).

All other Events are wrapped in an EventMessage. The

EventMessage contains a unique Identifier for the event, as well as a

Timestamp and Meta Data. The DomainEventMessage additionally contains the

identifier of the aggregate that raised the Event and the sequence number which allows

the order of events to be reproduced.

Even though the DomainEventMessage contains a reference to the Aggregate Identifier, you should always include that identifier in the actual Event itself as well. The identifier in the DomainEventMessage is meant for the EventStore and may not always provide a reliable value for other purposes.

The original Event object is stored as the Payload of an EventMessage. Next to the payload, you can store information in the Meta Data of an Event Message. The intent of the Meta Data is to store additional information about an Event that is not primarily intended as business information. Auditing information is a typical example. It allows you to see under which circumstances an Event was raised, such as the User Account that triggered the processing, or the name of the machine that processed the Event.

![[Note]](images/note.png) | Note |

|---|---|

|

In general, you should not base business decisions on information in the meta-data of event messages. If that is the case, you might have information attached that should really be part of the Event itself instead. Meta-data is typically used for auditing and tracing. |

Although not enforced, it is good practice to make domain events immutable, preferably by making all fields final and by initializing the event within the constructor.

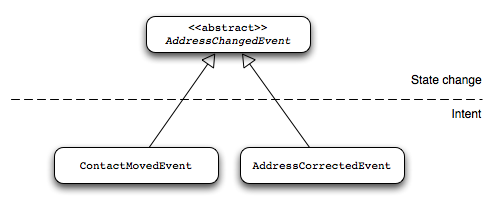

![[Note]](images/note.png) | Note |

|---|---|

|

Although Domain Events technically indicate a state change, you should try to

capture the intention of the state in the event, too. A good practice is to use an

abstract implementation of a domain event to capture the fact that certain state has

changed, and use a concrete sub-implementation of that abstract class that indicates

the intention of the change. For example, you could have an abstract

|

When dispatching an Event on the Event Bus, you will need to wrap it in an (Domain)

Event Message. The GenericEventMessage is an implementation that allows you

to wrap your Event in a Message. You can use the constructor, or the static

asEventMessage() method. The latter checks whether the given parameter

doesn't already implement the Message interface. If so, it is either

returned directly (if it implements EventMessage,) or it returns a new

GenericEventMessage using the given Message's payload and

Meta Data.

An Aggregate is an entity or group of entities that is always kept in a consistent state. The aggregate root is the object on top of the aggregate tree that is responsible for maintaining this consistent state.

![[Note]](images/note.png) | Note |

|---|---|

|

The term "Aggregate" refers to the aggregate as defined by Evans in Domain Driven Design: “A cluster of associated objects that are treated as a unit for the purpose of data changes. External references are restricted to one member of the Aggregate, designated as the root. A set of consistency rules applies within the Aggregate's boundaries. ” A more extensive definition can be found on: http://domaindrivendesign.org/freelinking/Aggregate. |

For example, a "Contact" aggregate could contain two entities: Contact and Address. To keep the entire aggregate in a consistent state, adding an address to a contact should be done via the Contact entity. In this case, the Contact entity is the appointed aggregate root.

In Axon, aggregates are identified by an Aggregate Identifier. This may be any object, but there are a few guidelines for good implementations of identifiers. Identifiers must:

-

implement equals and hashCode to ensure good equality comparison with other instances,

-

implement a toString() method that provides a consistent result (equal identifiers should provide an equal toString() result), and

-

preferably be serializable.

The test fixtures (see Chapter 8, Testing) will verify these

conditions and fail a test when an Aggregate uses an incompatible identifier.

Identifiers of type String, UUID and the numeric types are

always suitable.

![[Note]](images/note.png) | Note |

|---|---|

|

It is considered a good practice to use randomly generated identifiers, as opposed to sequenced ones. Using a sequence drastically reduces scalability of your application, since machines need to keep each other up-to-date of the last used sequence numbers. The chance of collisions with a UUID is very slim (a chance of 10−15, if you generate 8.2 × 10 11 UUIDs). Furthermore, be careful when using functional identifiers for aggregates. They have a tendency to change, making it very hard to adapt your application accordingly. |

In Axon, all aggregate roots must implement the

AggregateRoot

interface. This interface describes the basic operations needed by the

Repository to store and publish the generated domain events. However, Axon

Framework provides a number of abstract implementations that help you writing

your own aggregates.

![[Note]](images/note.png) | Note |

|---|---|

|

Note that only the aggregate root needs to implement the

|

The AbstractAggregateRoot is a basic implementation that provides

a registerEvent(DomainEvent) method that you can call in your

business logic method to have an event added to the list of uncommitted events.

The AbstractAggregateRoot will keep track of all uncommitted

registered events and make sure they are forwarded to the event bus when the

aggregate is saved to a repository.

Axon framework provides a few repository implementations that can use event

sourcing as storage method for aggregates. These repositories require that

aggregates implement the

EventSourcedAggregateRoot

interface. As with

most interfaces in Axon, we also provide one or more abstract implementations to

help you on your way.

The interface

EventSourcedAggregateRoot

defines an extra method,

initializeState(), on top of the

AggregateRoot

interface. This method initializes an aggregate's state based on an event

stream.

Implementations of this interface must always have a default no-arg constructor. Axon Framework uses this constructor to create an empty Aggregate instance before initialize it using past Events. Failure to provide this constructor will result in an Exception when loading the Aggregate from a Repository.

The AbstractEventSourcedAggregateRoot implements all methods on